Summary

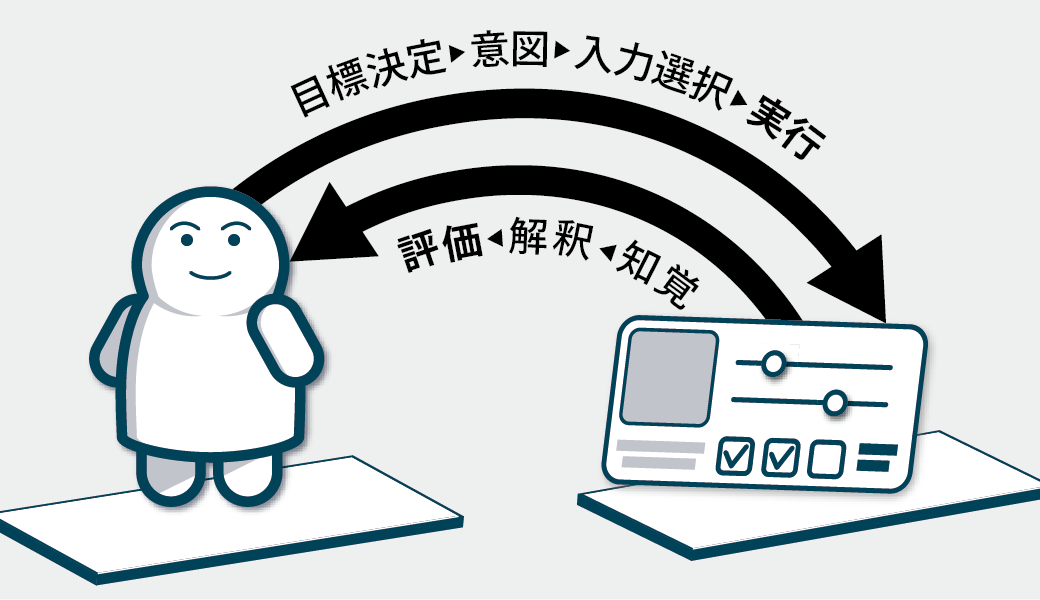

Live programming eliminates the gulf between code and execution. To design live programming systems, we first need to understand what kind of applications will be developed with them. Once we gain domain-specific knowledge of the target applications, we can create user interfaces for editing the running application, such as scrubbing sliders, color palettes, and timeline interfaces. The fluid experience of live programming comes from combining a deep understanding of the target applications with interactive user interfaces.

In a tutorial course held as part of the 40th JSSST Annual Conference (in Japanese), visual artist Baku Hashimoto and I served as lecturers, presenting a brief history of Live Programming research and providing a hands-on opportunity to develop a web-based Live Programming system.

Programming environments that focus on "liveness", such as visual programming and Smalltalk-based systems, have been around for a long time, but they have recently attracted renewed attention as a key to realizing a fluid programming experience. We reviewed the situation from the perspectives of both researchers and artists.

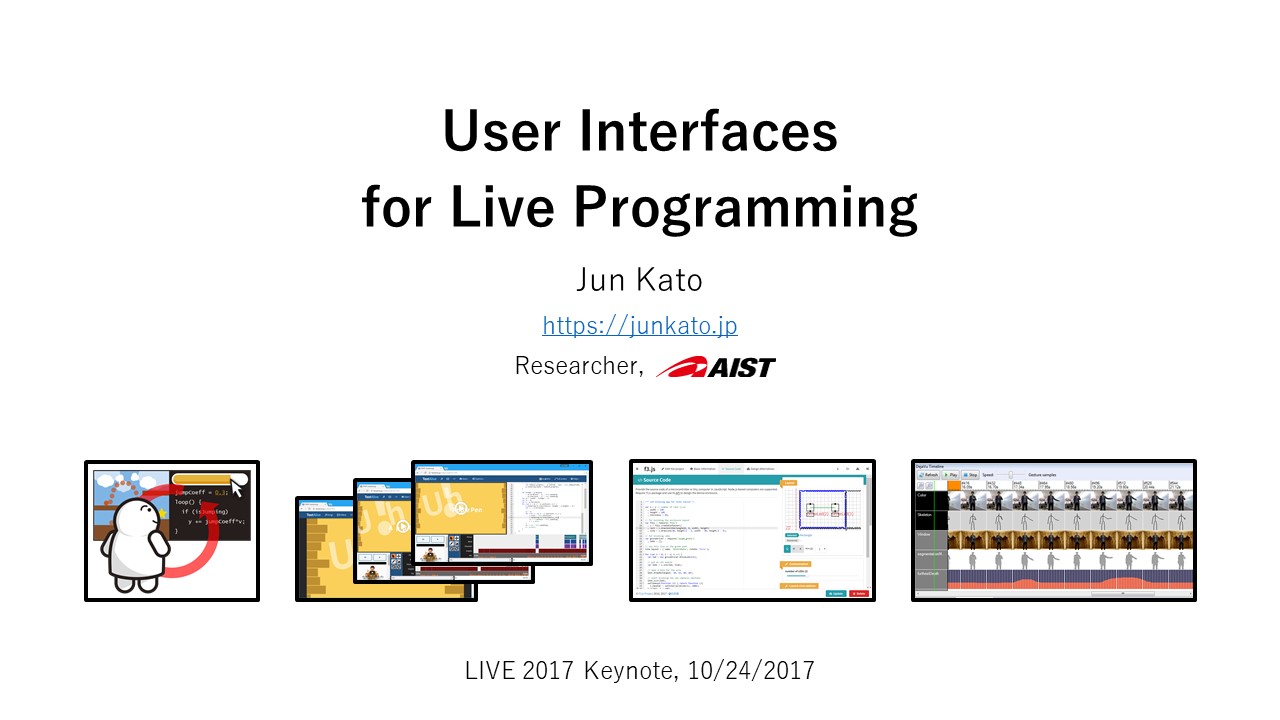

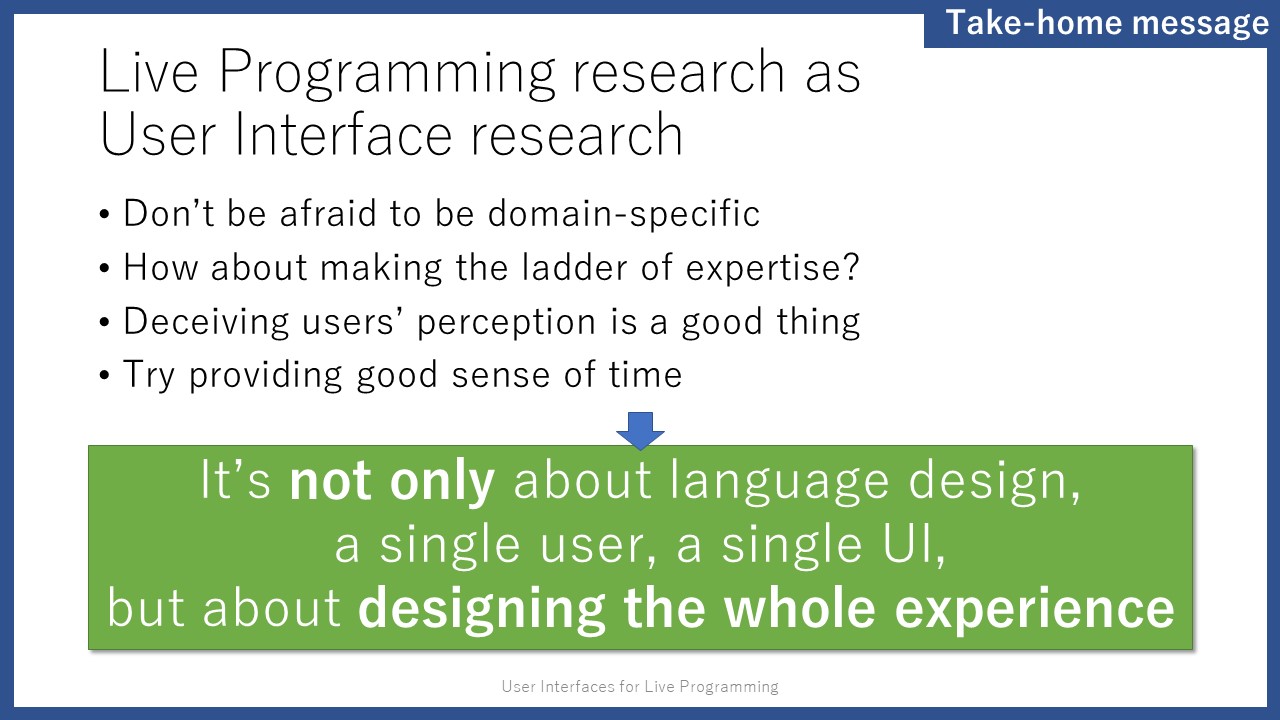

In the keynote talk at LIVE '17, I discussed the liveness of programming systems in light of user interface design. I introduced user interfaces for programming and discussed the importance of integrating graphical representations into programming environments. I extended the scope of "liveness" by describing two kinds of interactions, physical and developer-user, and provided insights on designing future live programming systems.

In particular, two sub-projects, Live Tuning and User-Generated Variables, focus on user interfaces for enhancing developer-user interactions.

The 40th JSSST Annual Conference Tutorial

Presentation Slides (Japanese)

Introduction to Live Programming: Try It, Make It, Know It

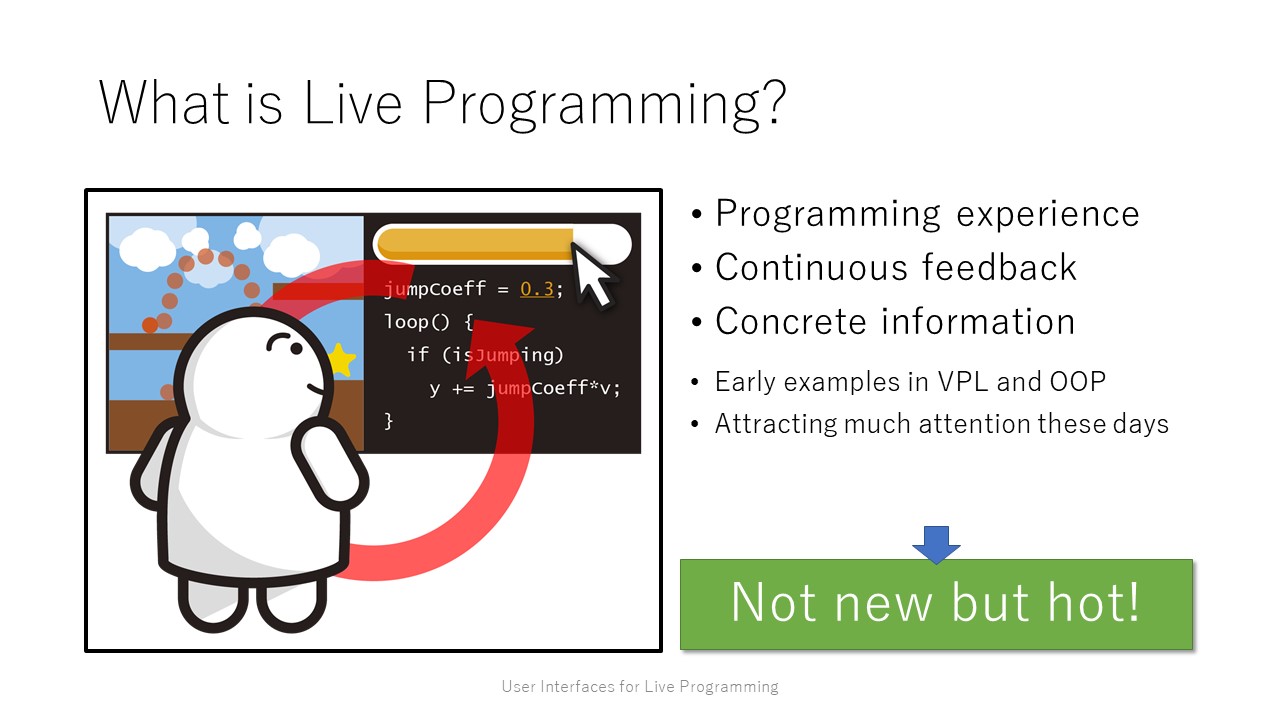

What is Live Programming?

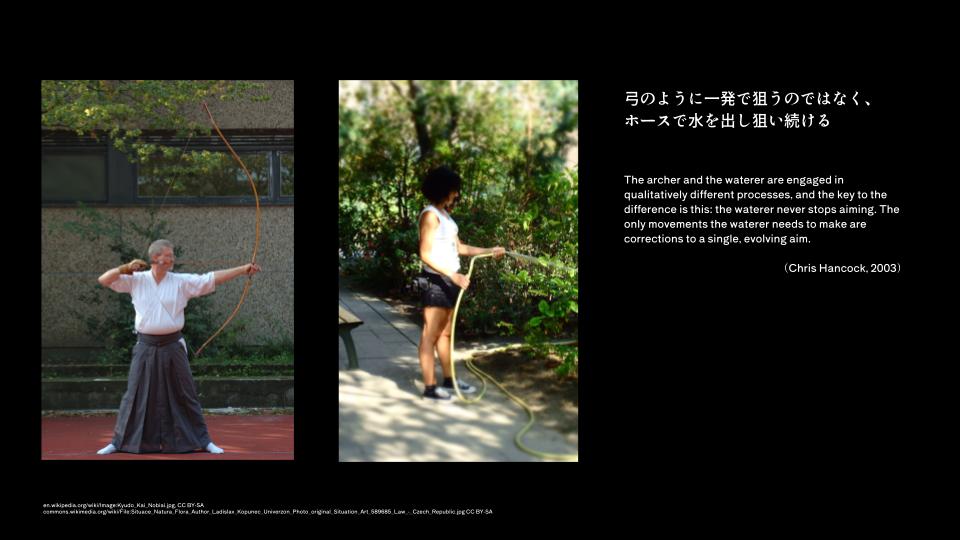

Live Programming has the clear advantage of not forcing programmers to bet everything on a single shot, an idea captured in Chris Hancock's dissertation by the metaphor of the bow and arrow versus the watering hose.

This tutorial introduced the history of live programming research and examined its significance from different perspectives by reviewing related tools and practices proposed in research and used in visual art.

Technically speaking, the pursuit of "liveness" in programming systems reached a milestone in the 1990s. Many visual programming systems based on dataflow languages and environments, including VIVA, were researched and developed to allow interactive editing of programs, and Morphic, a framework for user interface development in Smalltalk-based systems, appeared. However, with the exception of spreadsheets, visual programming did not become mainstream; instead, general-purpose object-oriented languages and integrated development environments, which were in some ways a step backward from Smalltalk, gained popularity.

We had to wait until the 2010s for renewed interest in "liveness" in the programming experience, when Bret Victor gave his "Inventing on Principle" talk with many appealing demonstrations and the Workshop on Live Programming was held for the first time. I see "liveness" as a kind of user experience that is achieved by building a ladder of abstraction from an abstract programming language down to a concrete program execution state; no individual technical component, such as a single programming language design or a single tool design, seems sufficient on its own. At the heart of designing a live programming system is the design of its user interface, which must be tailored to the domain of the applications developed with it. From this perspective, visual programming environments for designers and tools for the visual arts have long been successful, and more recently, a growing number of live programming systems have been specialized for diverse application domains.

For this tutorial, please also see the corresponding page on Scrapbox (Wiki site; Japanese) and the hands-on documentation on GitHub (Japanese). Also, for future perspectives not covered in depth in the tutorial, please refer to my keynote at LIVE 2017.

LIVE 2017 Keynote

Presentation Slides

User Interfaces for Live Programming

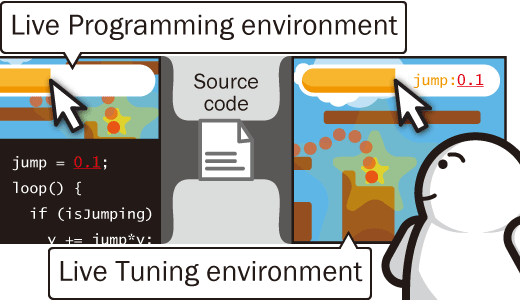

Live Programming systems provide concrete information about how a program behaves when it is executed. With the help of this concrete information, programmers can easily iterate between editing the program and testing its behavior.

Note that Live Programming is not new in itself; early examples can be found in visual programming and object-oriented programming environments. Why, then, is it attracting so much attention these days? In short, I believe it is because of its focus on the user experience.

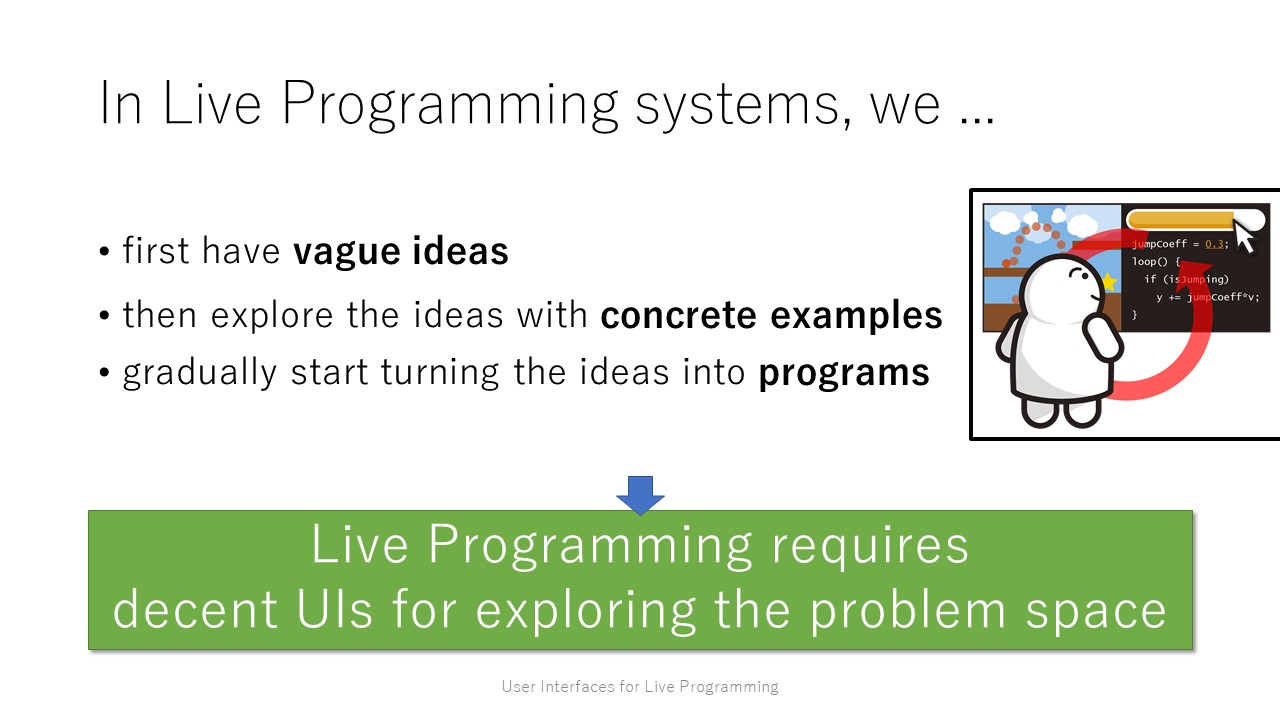

Live Programming requires decent user interfaces for exploring the problem space.

Building decent Live Programming systems therefore requires a deep understanding of the target application domains, e.g., to avoid sudden changes in the program behavior, to keep the program and its output related to each other, and to allow continuous exploration of the problem space.

From now on, I will discuss three perspectives on user interface design of Live Programming systems and interesting directions to explore in each.

Programming with End-users

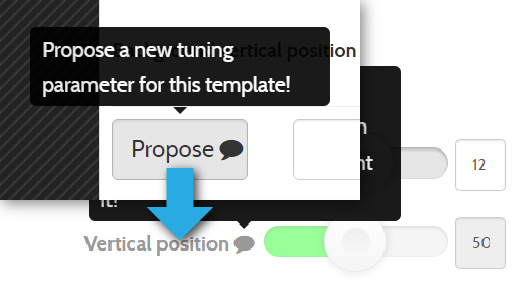

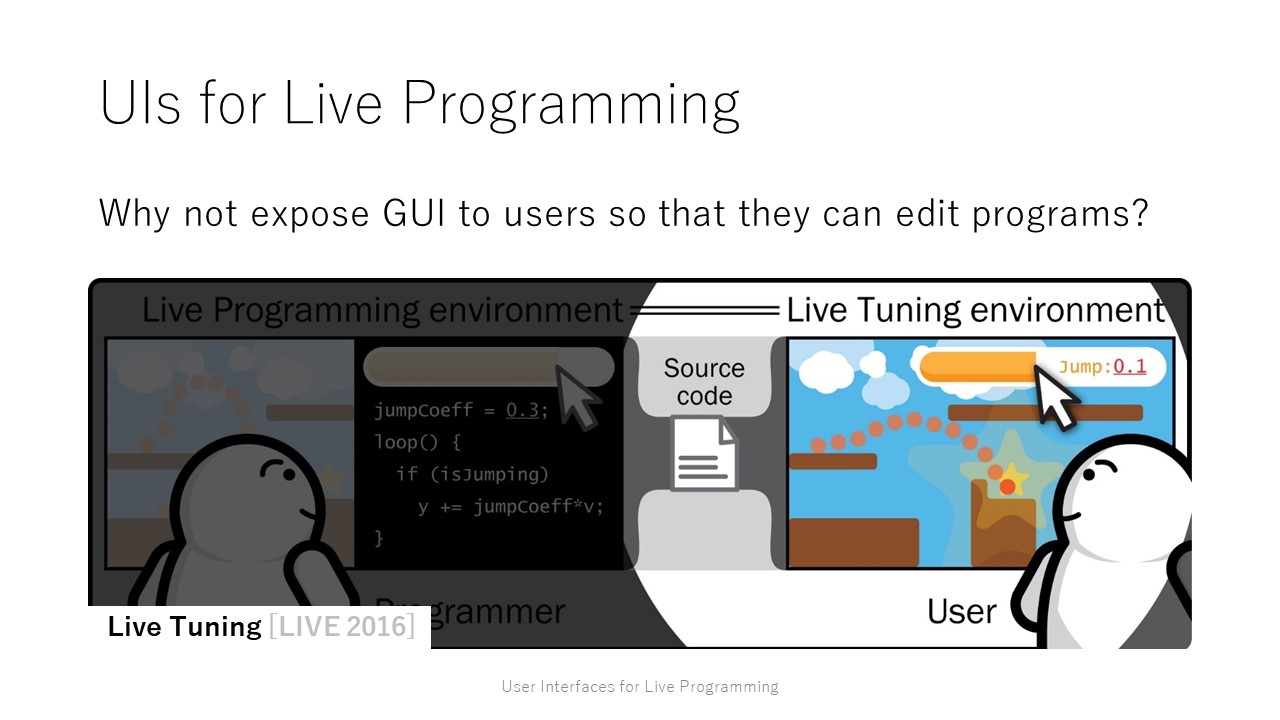

As Live Programming systems provide interactive and intuitive user interfaces for debugging and editing programs, some of the user interfaces can be exposed to the end-users so that they can customize the programs as they wish. [Live Tuning]

When the provided user interfaces cannot satisfy their needs, they can still submit requests to programmers with rich contextual information provided by the Live Programming systems. [User-Generated Variables]

Live Programming techniques benefit not only programmers, but also end-users, and enable Programming as Communication.

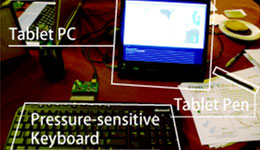

Programming this Material World

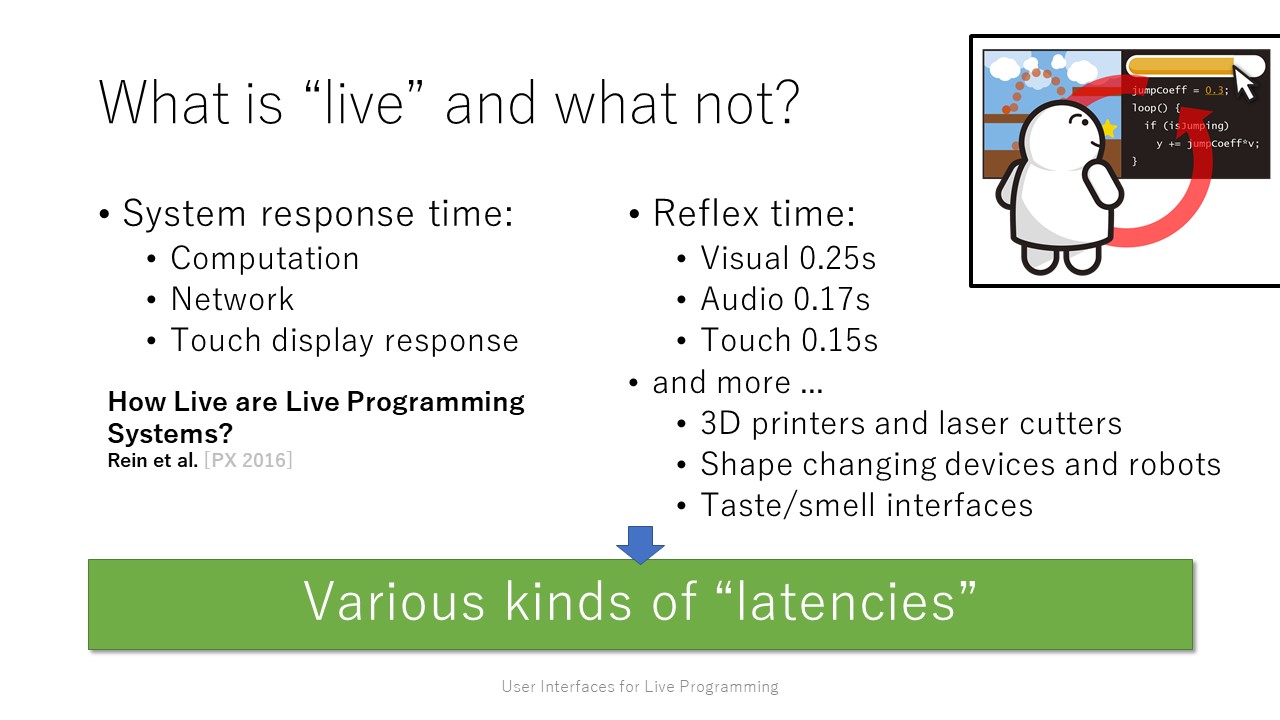

Live Programming often targets simple applications such as drawing graphics. In contrast, "software is eating the world" and there are complex and interesting applications such as physical computing and virtual reality.

Their actual "frame rate" can be very slow, e.g., when printing device enclosures or producing scents. Emulating, or sometimes even pretending, is needed to provide continuous feedback.

Making full use of all five senses in programming environments will likely be crucial in the near future.

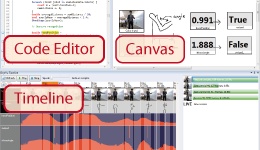

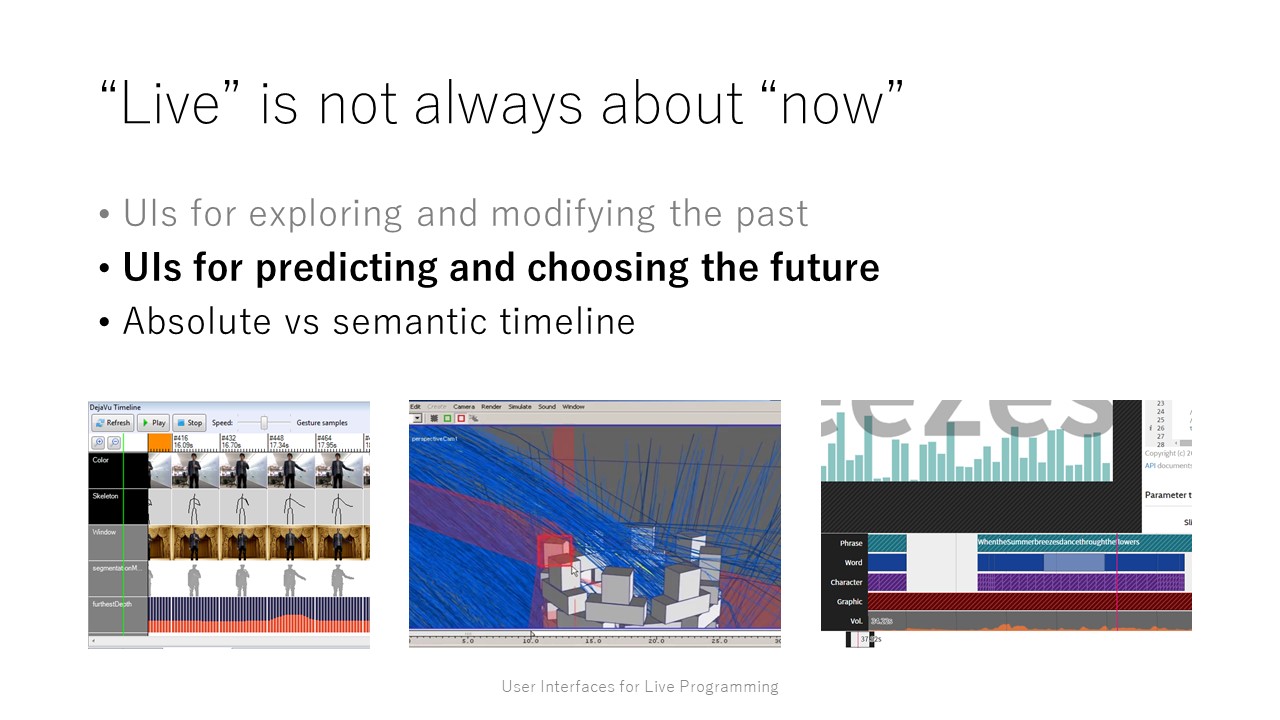

Programming with Time Travel

Live Programming focuses on providing live feedback to the programmer, but this does not always mean providing real-time information about the running program.

The timeline interface and record-and-replay features together provide a time-traveling experience and play an important role in finding critical timings in the history. Once the timing of interest is found, editing the code and program input updates the program output, allowing the programmer to explore possible futures.
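To make the time-travel workflow concrete, here is a minimal sketch under stated assumptions: the program is modeled as a hypothetical step function `(state, input) -> next state`, and `Recording`, `replay`, `find_timing`, and `explore_future` are illustrative names, not the API of any of the systems discussed here. Recorded inputs are replayed to build a timeline, a critical timing is located, and the edited code continues from that point.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical program model: each frame maps (state, input) to the next state.
Step = Callable[[float, float], float]


@dataclass
class Recording:
    """Hypothetical record-and-replay log of the input received at each frame."""
    inputs: List[float] = field(default_factory=list)

    def record(self, value: float) -> None:
        self.inputs.append(value)


def replay(step: Step, initial: float, inputs: List[float]) -> List[float]:
    """Re-run a program over recorded inputs; the result is the state timeline."""
    timeline = []
    state = initial
    for value in inputs:
        state = step(state, value)
        timeline.append(state)
    return timeline


def find_timing(timeline: List[float], is_critical: Callable[[float], bool]) -> Optional[int]:
    """Return the first frame whose state matches the condition of interest."""
    for frame, state in enumerate(timeline):
        if is_critical(state):
            return frame
    return None


def explore_future(original: Step, edited: Step, initial: float,
                   rec: Recording, frame: int) -> List[float]:
    """Keep the recorded history up to `frame`, then continue with edited code."""
    past = replay(original, initial, rec.inputs[:frame + 1])
    start = past[-1] if past else initial
    future = replay(edited, start, rec.inputs[frame + 1:])
    return past + future


def accumulate(state: float, value: float) -> float:
    return state + value


def accumulate_half(state: float, value: float) -> float:  # the "edited" code
    return state + value / 2


rec = Recording()
for v in [1.0, 2.0, 3.0, 4.0]:
    rec.record(v)

timeline = replay(accumulate, 0.0, rec.inputs)       # [1.0, 3.0, 6.0, 10.0]
critical = find_timing(timeline, lambda s: s > 2.0)  # frame 1
futures = explore_future(accumulate, accumulate_half, 0.0, rec, critical)
print(futures)  # [1.0, 3.0, 4.5, 6.5]
```

Because the inputs are recorded rather than regenerated, every alternative future starts from exactly the same past, which is what lets the programmer compare edits against one critical moment.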

Designing Live Programming systems is not just about language design, a single user, or a single UI; it is about designing the whole experience.

Live Programming is a highly interdisciplinary topic that involves programming language design, software engineering, and human-computer interaction. The LIVE and PX workshops and SIGPX are attempts to bring together people from all of these research fields.

Relevant projects

The following projects implement or discuss Live Programming experiences.

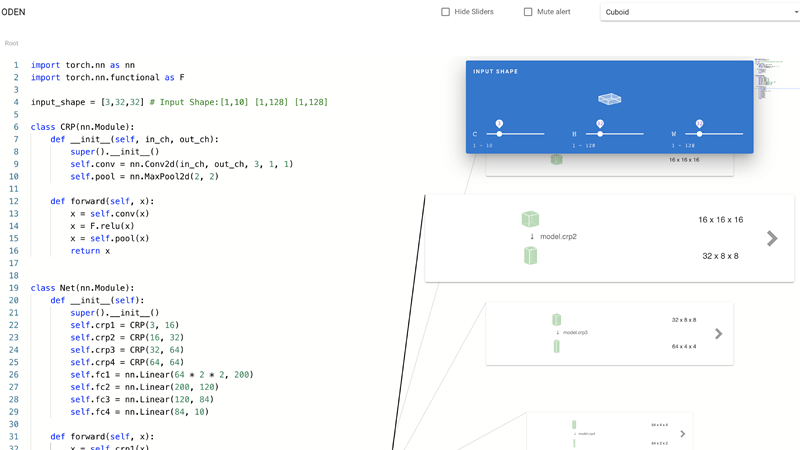

ODEN seamlessly supports the repeated cycle of editing and experimenting in deep learning application development: the user constructs a neural network (NN) with live visualization and then transitions into experimentation to instantly train and test the NN architecture.

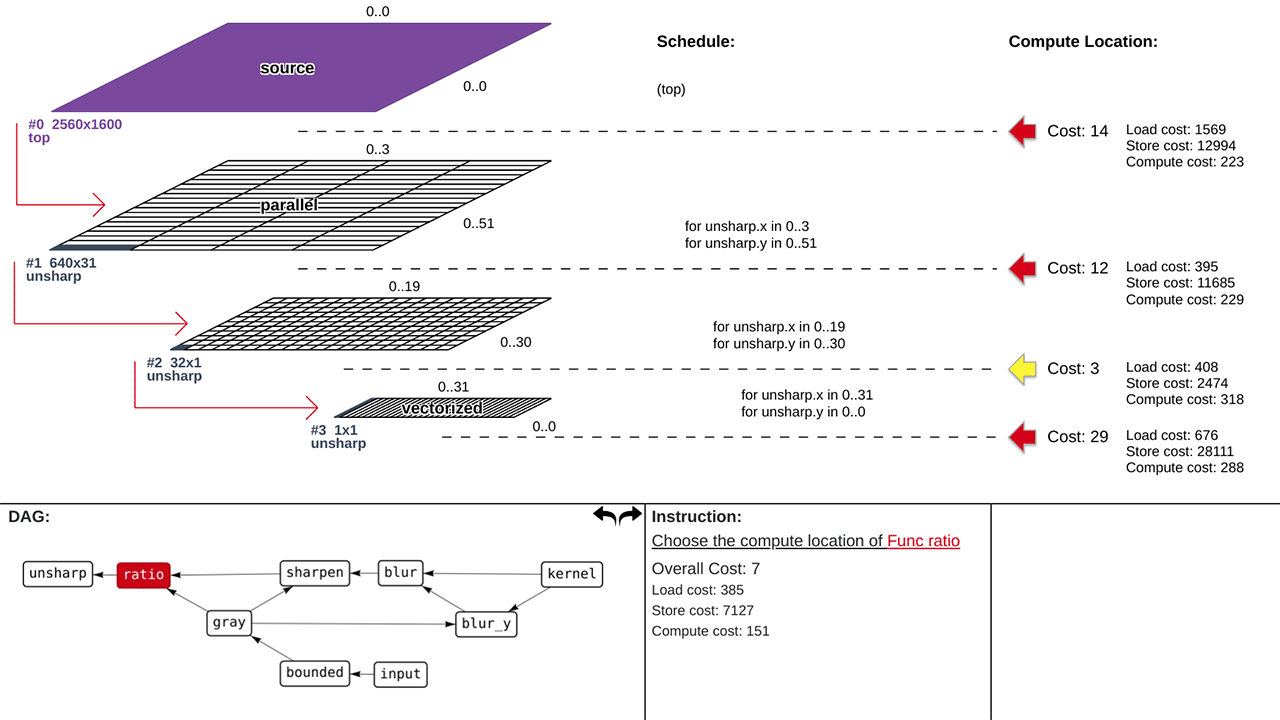

"Guided optimization" provides programmers with a set of valid optimization options and interactive feedback about their current choices, enabling them to comprehend and efficiently optimize image processing code without the time-consuming trial and error required in traditional text editors.

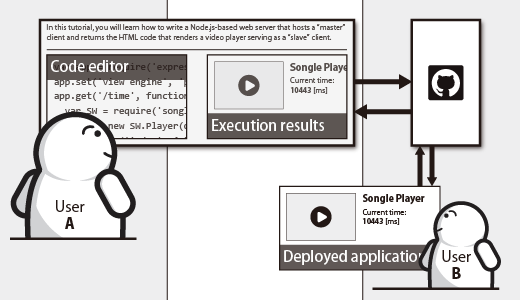

DeployGround is a framework for developing web-based tutorials that seamlessly connects the API playground and deployment target for kick-starting the application development.

Live programming eliminates the gulf between code and execution. User interface design plays the key role in providing live programming experience.

With appropriate user interface design, live programming can potentially benefit end-users, be used for applications whose computation takes a long time, and mean much more than merely providing real-time information of the running program.

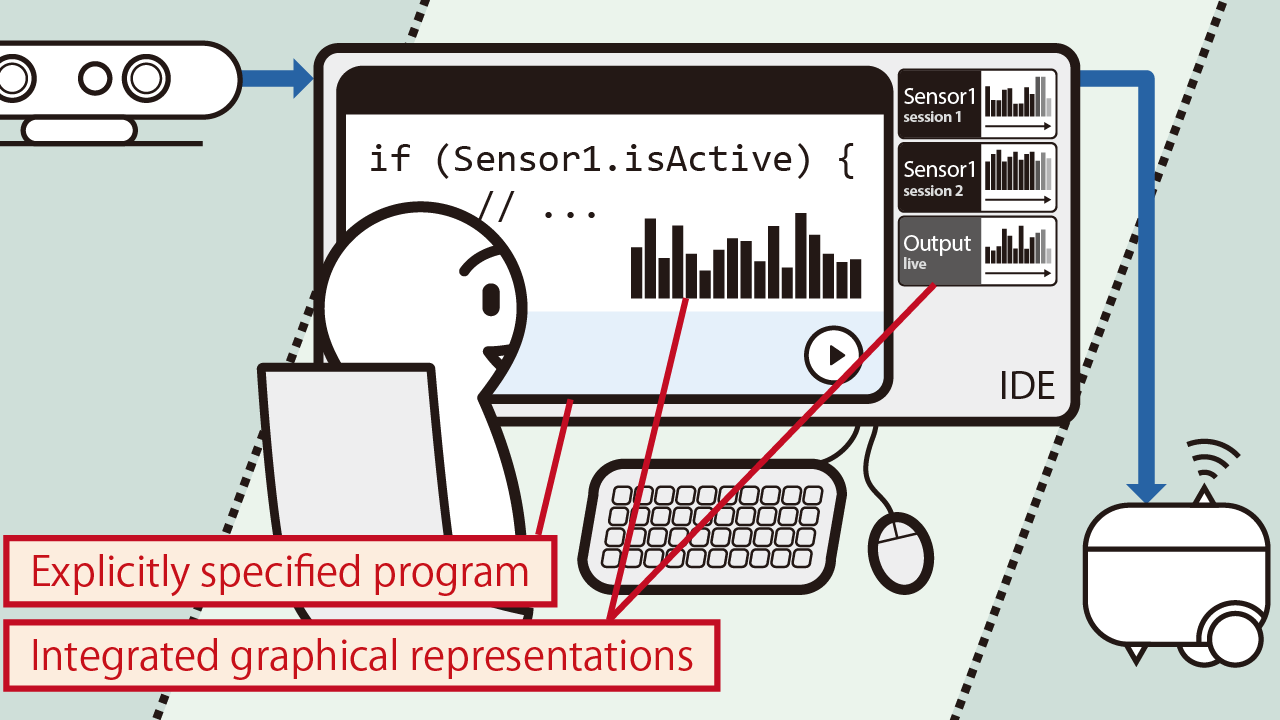

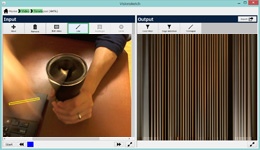

The programming-with-examples (PwE) workflow lets developers create interactive applications with the help of example data. It takes a general programming environment and adds dedicated user interfaces for visualizing and managing the data.

This is particularly useful in developing data-intensive applications such as physical computing, image processing, video authoring, machine learning, and others that require intensive parameter tuning.

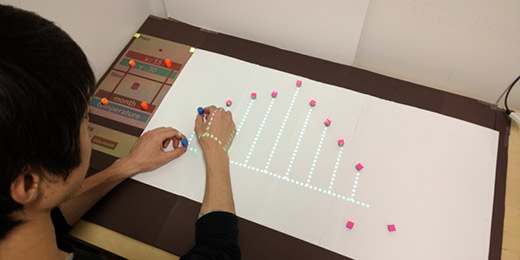

Reactile is an exploratory research project that investigates and proposes a new approach to programming Swarm UI by leveraging direct physical manipulation.

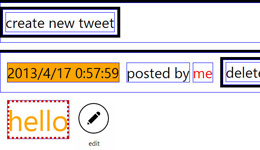

UGVs are variables generated by end-users' requests and implemented (or discarded) by programmers. Using UGVs helps with both submitting feature requests and implementing them.

Live Tuning is the subset of Live Programming interaction that only involves changes in constant values through parameter tuning interfaces. It expands Live Programming benefits to non-programmers.
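The key restriction of Live Tuning, that only constant values change while the program code stays fixed, can be illustrated with a minimal sketch. It assumes a hypothetical `TuningPanel` registry that a slider UI would write to; the names and the clamping rule are illustrative, not taken from the Live Tuning implementation.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Tunable:
    value: float
    low: float
    high: float


class TuningPanel:
    """Hypothetical registry of constants exposed to a parameter-tuning UI."""

    def __init__(self) -> None:
        self._params: Dict[str, Tunable] = {}

    def declare(self, name: str, default: float, low: float, high: float) -> float:
        # The first call registers the constant; later calls return its
        # current (possibly tuned) value, so the program never restarts.
        if name not in self._params:
            self._params[name] = Tunable(default, low, high)
        return self._params[name].value

    def set(self, name: str, value: float) -> None:
        # Called by a slider; clamping keeps end-user edits in a valid range.
        param = self._params[name]
        param.value = min(max(value, param.low), param.high)


panel = TuningPanel()


def frame(x: float) -> float:
    # Program code: only the constant "speed" is exposed for tuning.
    speed = panel.declare("speed", default=2.0, low=0.0, high=10.0)
    return x + speed


x = frame(0.0)            # uses the default speed 2.0
panel.set("speed", 5.0)   # an end-user drags the slider
x = frame(x)              # 2.0 + 5.0
print(x)  # 7.0
```

Declaring the valid range alongside the constant is one way to make it safe to hand the slider to a non-programmer: they can explore freely without being able to break the program.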

A parametric design tool for physical computing devices for both interaction designers and end-users.

An integrated design environment for kinetic typography; desktop app revamped as a web service in 2015.

An IDE for example-centric programming of image processing applications, enabling fluid transition between abstract text-based coding and concrete direct manipulation.

An IDE for visual applications that shows interactive screencasts for replaying program executions and editing visual parameters.

The TouchDevelop IDE enables live programming for user interface programming by cleanly separating the rendering and non-rendering aspects of a UI program, allowing the display to be refreshed on a code change without restarting the program.
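The idea of separating rendering from non-rendering code can be sketched as follows, assuming a hypothetical `LiveRuntime` in which the display is derived purely from persistent state. This is an illustration of the principle only, not TouchDevelop's actual implementation: because rendering holds no state of its own, swapping in new rendering code just re-renders the existing state.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AppState:
    """Non-rendering part of the program: it survives code changes."""
    items: List[str]


Render = Callable[[AppState], str]


class LiveRuntime:
    """Hypothetical runtime that keeps state and rendering code apart."""

    def __init__(self, state: AppState, render: Render) -> None:
        self.state = state
        self.render = render
        self.display = render(state)

    def update(self, action: Callable[[AppState], None]) -> None:
        # Event handlers mutate the state; the display is then recomputed.
        action(self.state)
        self.display = self.render(self.state)

    def hot_swap(self, new_render: Render) -> None:
        # A code change to rendering: keep the state and re-render, no restart.
        self.render = new_render
        self.display = new_render(self.state)


def render_v1(s: AppState) -> str:
    return ", ".join(s.items)


def render_v2(s: AppState) -> str:  # the programmer's edited rendering code
    return "\n".join(f"- {item}" for item in s.items)


rt = LiveRuntime(AppState(["milk"]), render_v1)
rt.update(lambda s: s.items.append("eggs"))
rt.hot_swap(render_v2)
print(rt.display)
```

After the swap, the display reads "- milk" and "- eggs" on two lines: the item added before the code change is still there because only the rendering function was replaced.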

An IDE that eases the iterative development of interactive camera-based programs with support for monitoring, recording, reviewing, and reprocessing temporal data.

A "pressure-sensitive" programming environment with visual feedback from its built-in 2D physics simulator.
